A simple, user-friendly way to track system health, and one that is easy to modify and deploy, is a 'health-check' script. It uses native Linux commands, generates a simple text report and sends it to the required users at regular intervals. In this blog post, I'm going to demonstrate how to run such a script as a cron job that saves each report as a log file, and how to implement a log rotation policy for those files. Log rotation takes care of compressing and removing old report files, which keeps their disk usage under control.

System Environment

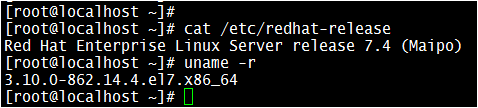

A virtual machine running RHEL 7.4 on VMware Workstation 11 is used for this demonstration.

Let's Start!

Step 1: Copy the main health-check script

I'm going to use the 'health-check.sh' script that I created in one of my earlier blog posts, which can be accessed using the link below:

The 'health-check.sh' script is simple, uses only native Linux commands and runs quickly on most Linux distributions. For more details, and to download the script file, please visit the blog post linked above. The script can be modified to suit your own requirements.

I've copied the script file to the root directory ('/') and made it executable, as shown below:

[root@localhost ~]# ls -l /health-check.sh

-rwxr-xr-x. 1 root root 7621 Jan 5 18:40 /health-check.sh
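For completeness, here is a minimal sketch of the copy-and-chmod step. It assumes the downloaded script sits in the current directory as './health-check.sh'; the function name 'install_health_check' is hypothetical.

```bash
#!/bin/bash
# Hypothetical helper: copy the main script into place and make it executable.
# Assumption: the downloaded copy is ./health-check.sh unless another path is given.
install_health_check() {
    local src="${1:-./health-check.sh}"
    local dest="${2:-/health-check.sh}"   # location used in this post
    # 755 gives the -rwxr-xr-x permissions seen in the listing above
    cp "$src" "$dest" && chmod 755 "$dest"
}
```

Run it as root, e.g. `install_health_check ./health-check.sh /health-check.sh`, then confirm the result with `ls -l /health-check.sh`.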

This is the main script: it generates the system health report and prints it to the terminal. One small change is required. Edit 'health-check.sh' and set the variable 'COLOR', defined at the beginning of the script, to 'no', so that the report written to a file does not contain terminal color codes.
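The edit can also be made non-interactively. A minimal sketch, assuming 'COLOR' is assigned at the very start of a line (e.g. `COLOR=yes` or `COLOR="yes"`); 'disable_color' is a hypothetical helper, and note that it rewrites every line that begins with `COLOR=`.

```bash
#!/bin/bash
# Hypothetical helper: turn off colored output in the main script.
# Assumption: COLOR is assigned at the start of a line, e.g. COLOR=yes or COLOR="yes".
disable_color() {
    local script="${1:-/health-check.sh}"
    # GNU sed: edit in place, keeping the original as <script>.bak
    sed -i.bak 's/^COLOR=.*/COLOR=no/' "$script"
    # Print the resulting assignment so the change can be confirmed
    grep '^COLOR=' "$script"
}
```

Running `disable_color /health-check.sh` should print `COLOR=no`; the untouched original remains in '/health-check.sh.bak'.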

Step 2: Create another script which calls the main script file

Let's create a simple script file called 'health-check-gen.sh' in the same folder and make it executable, as shown below:

[root@localhost ~]# ls -l /health-check-gen.sh

-rwxr-xr-x. 1 root root 104 Jan 5 19:33 /health-check-gen.sh

The file contents should be as given below:

[root@localhost ~]# cat /health-check-gen.sh

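#!/bin/bash

# Timestamp used in the report file name, e.g. 06-01-19-1605 (dd-mm-yy-HHMM)
REPORTDATE="$(date +%d-%m-%y-%H%M)"
REPORTDIR="/var/log/health-report"
REPORTFILE="$REPORTDIR/health-check-report-$REPORTDATE.txt"

# Create the report folder if it does not exist yet
mkdir -p "$REPORTDIR"

# Run the main script, save its output to the report file and discard error messages
/health-check.sh 1> "$REPORTFILE" 2> /dev/null

# Mail a short message to root with the report attached (mailx -a attaches a file)
echo "System Health Check Report For: $REPORTDATE" \
    | mailx -a "$REPORTFILE" -s 'System Health Check Report Attached' root@localhost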

A screen shot of the 'health-check-gen.sh' file:

-- Runs the main 'health-check.sh' script.

-- Saves its output as a text file in the '/var/log/health-report/' folder, with a file name in the format 'health-check-report-(%d-%m-%y-%H%M).txt'; error messages are discarded.

-- Sends an email to the specified user (root@localhost here) with the report file as an attachment.

The 'health-check.sh' script is called from a separate wrapper script ('health-check-gen.sh') to keep the core script independent of how its output is stored and delivered. This keeps both scripts portable and easier to change and maintain.
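Because the wrapper relies on 'mailx' (on RHEL 7 it comes from the 'mailx' package), it may be worth checking that the commands it uses are available before scheduling it. A minimal sketch; 'check_prereqs' is a hypothetical name.

```bash
#!/bin/bash
# Hypothetical helper: report any of the given commands that are not on PATH.
# Returns 0 if all are found, 1 otherwise.
check_prereqs() {
    local cmd missing=0
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "missing: $cmd" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

For example, `check_prereqs mailx date` prints nothing and succeeds when both are installed; if 'mailx' is reported missing, it can be installed with `yum install mailx`.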

Step 3: Create a cron job

In this example, the cron job runs once every 5 minutes (for demonstration purposes only), as shown below:

[root@localhost ~]# crontab -l

*/5 * * * * /health-check-gen.sh
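Instead of editing with 'crontab -e', the entry can also be added from a script. A minimal sketch, with 'merge_cron_entry' as a hypothetical helper that reads the current crontab on stdin and prints it with the new entry appended, skipping the entry if an identical line already exists.

```bash
#!/bin/bash
# Hypothetical helper: print the crontab read from stdin, plus ENTRY
# unless an identical line is already present.
merge_cron_entry() {
    local entry="$1" current
    current="$(cat)"
    # Keep the existing entries, if any
    if [ -n "$current" ]; then
        printf '%s\n' "$current"
    fi
    # Append the new entry only once (fixed-string, whole-line match)
    if ! printf '%s\n' "$current" | grep -Fqx -- "$entry"; then
        printf '%s\n' "$entry"
    fi
}
```

Usage as root: `crontab -l 2>/dev/null | merge_cron_entry '*/5 * * * * /health-check-gen.sh' | crontab -`. The `2>/dev/null` hides the "no crontab" message when root has no crontab yet, and `crontab -` installs the result from stdin.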

To run the script once a week instead, for example every Sunday at 00:00, modify the cron job as shown below:

[root@localhost ~]# crontab -l

0 0 * * sun /health-check-gen.sh
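For reference, the five time fields of the weekly entry read as follows (per crontab(5), 'sun' could equally be written as 0 or 7):

```
# minute hour day-of-month month day-of-week  command
0        0    *            *     sun          /health-check-gen.sh
```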

Adjust the cron schedule to suit your requirements.

Step 4: Verify the report

Now go to the '/var/log/health-report/' folder and check that the report has been generated (you may need to wait up to 5 minutes for the first file to appear). Alternatively, run the '/health-check-gen.sh' script manually to create a report file right away.

[root@localhost health-report]# ls -ltr

total 16

-rw-r--r--. 1 root root 4365 Jan 6 16:05 health-check-report-06-01-19-1605.txt

-rw-r--r--. 1 root root 4583 Jan 6 16:10 health-check-report-06-01-19-1610.txt
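To check from the command line rather than by reading the listing, here is a minimal sketch that prints the newest report and fails if there is none or it is empty. 'latest_report' is a hypothetical helper; parsing `ls` output is acceptable here only because the report names contain no spaces.

```bash
#!/bin/bash
# Hypothetical helper: print the newest health report in DIR,
# or return 1 if no non-empty report exists.
latest_report() {
    local dir="${1:-/var/log/health-report}" newest
    # ls -t sorts by modification time, newest first
    newest="$(ls -1t "$dir"/health-check-report-*.txt 2>/dev/null | head -n 1)"
    if [ -n "$newest" ] && [ -s "$newest" ]; then
        echo "$newest"
    else
        echo "no non-empty report found in $dir" >&2
        return 1
    fi
}
```

Calling `latest_report` with no argument checks '/var/log/health-report'.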

Next, verify that the root user has received the email with the health check report.

The email arrives with the health check report file attached. Open it and check that the report contents are what you need.
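Running `mail` as root opens the mailbox interactively. For a quick scripted check, here is a minimal sketch that counts messages with the report's subject line. It assumes root's local mail is stored in the mbox file '/var/spool/mail/root' (the usual location on RHEL 7); 'count_report_mails' is hypothetical.

```bash
#!/bin/bash
# Hypothetical helper: count report emails in a local mbox file.
# Assumption: root's mail is stored in /var/spool/mail/root (mbox format).
count_report_mails() {
    local mbox="${1:-/var/spool/mail/root}"
    # grep -c prints the number of matching header lines (0 if none)
    grep -c '^Subject: System Health Check Report Attached' "$mbox"
}
```

With the 5-minute schedule, the count should grow by one every 5 minutes.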

You can also confirm that the cron job is running by examining the '/var/log/cron' file, which should contain entries like these:

Jan 6 16:05:02 localhost CROND[1874]: (root) CMD (/health-check-gen.sh)

Jan 6 16:10:01 localhost CROND[2005]: (root) CMD (/health-check-gen.sh)
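To pull out only the runs of the wrapper script, here is a minimal sketch; 'recent_cron_runs' is a hypothetical helper, and reading '/var/log/cron' normally requires root.

```bash
#!/bin/bash
# Hypothetical helper: show the last N cron runs of the wrapper script.
# Reads /var/log/cron by default (root access is needed to read it).
recent_cron_runs() {
    local log="${1:-/var/log/cron}" n="${2:-5}"
    # -F: treat the pattern as a fixed string (the parentheses are literal)
    grep -F 'CMD (/health-check-gen.sh)' "$log" | tail -n "$n"
}
```

For example, `recent_cron_runs /var/log/cron 2` should print the two entries shown above.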

Step 5: Create a log rotation rule

Next, let's create a log rotation rule that checks and rotates these report files weekly. Without one, the reports would keep piling up and '/var' could eventually run out of space, so log rotation is always recommended for files like these.

Go to the '/etc/logrotate.d/' folder and create a file named 'health-check' with the following contents:

[root@localhost ~]# cat /etc/logrotate.d/health-check

# Match only the .txt reports so that compressed (.gz) copies are not rotated again
/var/log/health-report/health-check-report-*.txt {
    weekly
    rotate 10
    missingok
    compress
    maxage 90
}
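Before relying on the rule, it can be checked with logrotate's debug mode, which reports what would be rotated without changing any files. A minimal sketch; 'dry_run_rule' is a hypothetical wrapper. Passing only this file skips the global defaults in '/etc/logrotate.conf' (on RHEL 7 these include options such as 'create' and 'dateext'), so `logrotate -d /etc/logrotate.conf` gives the combined picture.

```bash
#!/bin/bash
# Hypothetical helper: dry-run a logrotate rule.
# logrotate -d (debug mode) prints what would happen without changing any files.
dry_run_rule() {
    local rule="${1:-/etc/logrotate.d/health-check}"
    if ! command -v logrotate >/dev/null 2>&1; then
        echo "logrotate is not installed" >&2
        return 1
    fi
    logrotate -d "$rule"
}
```

To force a real rotation immediately (this does rename and compress files), run `logrotate -f /etc/logrotate.d/health-check` as root.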

This is how the rotation of these log files works:

→ weekly: the report files under the '/var/log/health-report' folder are checked and rotated once a week.

→ rotate 10: at most 10 rotated copies of each report file are kept, and older copies are deleted. Since every report has a unique, date-stamped name, logrotate handles each file separately.

→ missingok: no error is raised if no matching files exist.

→ compress: rotated files are compressed with gzip to save space and stored in the same folder with a '.gz' extension.

→ maxage 90: rotated copies older than 90 days are removed automatically; logrotate checks this age only when it rotates the corresponding log file.

* These parameters can be adjusted as required.
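Because logrotate evaluates 'maxage' only when it rotates a matching log file, a compressed copy may outlive the 90 days once its original report is no longer there to be rotated. A complementary cleanup that some administrators use is a 'find'-based sweep; this is a separate technique, not part of logrotate. A minimal sketch, assuming the rotated copies end in '.gz' and stay in the report folder; 'purge_old_reports' is hypothetical.

```bash
#!/bin/bash
# Hypothetical helper (not part of logrotate): delete compressed reports
# older than DAYS days from DIR and print each file removed.
# Assumption: rotated copies end in .gz and live directly in DIR.
purge_old_reports() {
    local dir="${1:-/var/log/health-report}" days="${2:-90}"
    # find ignores partial days, so -mtime +90 matches files at least 91 days old
    find "$dir" -maxdepth 1 -type f -name 'health-check-report-*.gz' \
        -mtime +"$days" -print -delete
}
```

It could be scheduled as a separate cron job, for example daily, alongside the rotation rule.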
